Is Trino the PostgreSQL of Analytics?

Exploring the Standard for Data-intensive Interactive Analytics

Sites like DB-Engines and Database of Databases list hundreds of active databases. But organizations are not keen to deploy a patchwork of databases when PostgreSQL offers a versatile, flexible, and open-source solution that can handle nearly any operational workload. As data architectures evolve, organizations are increasingly adopting a variety of analytical engines to process and analyze diverse data types. The emerging shift in mindset of using the best engine for the job, further fueled by open data layers, is leading to the proliferation of engines within environments. While this can offer flexibility, it also creates challenges in data governance, integration, query syntax variability, and performance optimization.

This begs a question: If PostgreSQL is the de facto standard for operational database management systems, could modern analytics platforms aspire to have a similar common standard, more specifically, can Trino be that standard?

Using a robust evaluation criteria, this report explores if Trino could be the open-source standard for data-intensive interactive analytics.

Current State of Analytics

Seismic shifts in the data space, such as open table formats that decouple storage and compute, are driving the rapid adoption of lakehouses. A lakehouse is a data architecture that combines the best of data warehouses and cloud data lakes, providing a centralized repository for both structured and unstructured data while offering scalable analytics and fine-grained governance.

The rise of lakehouses is largely attributed to the adoption of open table formats, such as Apache Iceberg, Delta Lake and Apache Hudi. Iceberg, in particular, has gained significant community and commercial traction due to its open platform, support for ACID transactions, schema evolution, and time travel capabilities. This enables organizations to manage and analyze large-scale datasets efficiently while maintaining data integrity and reliability.

Open table formats provide a metadata layer that manages information about tables, schemas, and partitions, opening up the data to be processed and analyzed by anyone in the organization with a compatible engine. With the removal of this lock-in, the center of gravity shifts away from proprietary engines and towards diverse analytical engines. However, this proliferation of analytical engines can also lead to high overhead, costs, and risks.

The ability to support an ever-growing set of analytical use cases is great, but it adds to the confusion on evaluating and selecting the appropriate engine. Businesses need to bring consistency across disparate engines. And, most teams do not want to spend their valuable data resources using multiple engines that require additional training and often lack interoperability amongst the engines and with the existing tech stack comprising data operations, security, monitoring, etc. This can lead to wasted productivity when the syntax needs to be manually translated so another engine can use the SQL.

The next section explores key requirements and features of the rapidly evolving space of modern analytics and how Trino meets stringent business needs. We examine how well open-source Trino meets the modern analytics tenets and whether it could be the standard. We draw parallels with PostgreSQL, to explore how Trino could become the preferred engine for analytics like PostgreSQL is for operational databases.

Let’s start by taking a look at Trino’s background before we define the key tenets of modern analytics.

Trino Background

Presto was born in 2012 at Facebook (now Meta) out of the need for a fast and distributed SQL query engine capable of handling large-scale data processing across various data sources when the organization had reached the scale limitations of Apache Hive. Presto quickly gained popularity within Facebook and was soon adopted by other tech giants like Netflix and Airbnb to query their data lakes.

In 2019, the original creators, including Martin Traverso, Dain Sundstrom, and David Phillips left Facebook to continue the development of the project independently, leading to the creation of PrestoSQL. This fork was driven by a desire to enhance the original vision of Presto, focusing on open governance and community-driven development.

In 2020, to avoid confusion and establish a distinct identity, PrestoSQL was rebranded as Trino. The Trino project continues to enhance the engine’s capabilities, adding features such as improved security, enhanced SQL functionality and performance, and broader support for connectors to federate data across various sources. With the rapid pace of the Trino project, many leading companies — such as Electronic Arts, Goldman Sachs, LinkedIn, Lyft, Netflix, Nielsen, Salesforce, and Stripe — rely on Trino.

Trino is a distributed SQL engine that delivers interactive query performance on vast datasets stored in lakehouses and other distributed storage systems. It has a thriving community of developers and users, and is leveraged in commercial offerings including Starburst, Amazon Athena, Amazon EMR Trino, and Azure HDinsight Trino.

The Trino Software Foundation provides governance and support for the project. It is an independent, non-profit organization with the mission of supporting a community of users and developers dedicated to the advancement of the Trino distributed SQL query engine. Its goal is to preserve the vision of high-quality, performant, and dependable software. The current board members of the Trino Software Foundation are Dain Sundstrom, David Phillips, and Martin Traverso. There are also numerous third-party tools and services that integrate with Trino to extend its capabilities.

Modern Analytics Key Features

While data-driven organizations often assess multiple query engines to meet their diverse analytics requirements, the intricacies often lie in the finer details. Figure 1 offers a comprehensive overview of the key pillars that define a modern analytical engine.

Let’s explore each area and see how Trino stacks up. We start with the use cases criteria to ensure that the analytical engine aligns with the specific needs and objectives of organizations’ real-world problems. Once an engine proves to be relevant, then we examine its other attributes, such as performance, reliability, open-source, ecosystem support, etc.

Use Cases

As previously mentioned, organizations aim to reduce the proliferation of disparate tools required to achieve their technical and business objectives:

- High-speed data ingestion. Ingesting data from one or more source systems into a data lakehouse.

- ETL/ELT: Extracting, transforming, and loading data into a lakehouse.

- Federated queries: Simplifying analytics that span multiple data lakes, and other data sources in a single query to deliver a comprehensive view of all data

- Ad-hoc analytics: Querying datasets that may be too big for traditional data warehouses, but are well suited to be in a lakehouse, at interactive speeds

- Petabyte scale batch & real-time streaming analytics: Building reports and dashboards on data warehouses, analytics platforms, and stream processing.

- Machine Learning and AI: Enabling exploratory data analysis (EDA) and data preparation for AI/ML model training.

Let’s see which use cases are best suited for Trino and which aren’t in its wheelhouse.

- High-speed data ingestion:

Although Trino wasn’t primarily designed for data ingestion, with the growing adoption of continuous ingestion from streaming systems, such as Kafka into open table formats, Trino has evolved to perform near real-time analytics on data formats, like Iceberg Tables. It can be integrated with streaming data pipelines to process incoming data and make predictions in near real-time.

- ETL/ELT:

Trino’s original purpose was to provide a massively parallel processing (MPP) distributed SQL query engine on data that has been ingested. It also provides a powerful and flexible way to transform data using SQL functions, expressions, and clauses to join data from multiple tables, clean, filter, aggregate, and manipulate data based on specific criteria in data lakes. However, long-running queries are susceptible to failures and hence need fault-tolerant mechanisms, like checkpoints and auto restarts to recover from unexpected incidents. In Trino’s fault-tolerant execution mode, intermediate exchange data is spooled and can be reused by another worker in the event of a worker outage or other fault during query execution.

Trino supports user-defined functions (UDFs) to create custom functions to perform complex transformations that are not supported by built-in SQL functions. It can also be embedded into ETL/ELT pipelines to perform data transformation tasks. For example, Trino connects to multiple data sources via a single dbt connection. The dbt transformations are passed to Trino which handles these SQL transformation queries and translates them to queries specific to the systems it connects to create tables or views and manipulate data.

- Federated queries:

Physical movement of data adds costs and overhead. Data federation reduces the complexity of data pipelines by removing the need for data movement. By leveraging connectors, Trino can seamlessly query data from a wide range of sources. Its federated query capabilities include joins and subqueries across multiple sources in a single query, pushdown optimization, and caching.

A unified semantic layer provides a single access point across multiple sources — which can also be used to support Data Fabric or Data Mesh strategies. Trino can be integrated with external tools like AtScale or Cube.js, which add semantic layer capabilities to the data stack by enabling a unified view and interpretation of data across sources.

- Ad hoc analytics:

Next, let’s shift our attention to how Trino supports a wide range of use cases, from ad-hoc analytics at interactive speeds to massive batch queries and high-volume applications with a latency that is in single-digit seconds in data lakes and lakehouses.

Trino’s native support for SQL enables analysts to run queries directly from BI tools like Tableau, Power BI, and Looker without additional connectors or custom coding. Because Trino connects to multiple data sources, users can perform complex queries across these sources in near real-time using its powerful MPP engine. This setup reduces data movement, simplifies workflows, and allows for faster insights, enabling a unified view of organizational data.

- AI/ML:

Finally, Trino supports AI/ML use cases in several ways starting from data discovery for EDA, data preparation and feature engineering for model training via its federation capabilities, and integration with popular machine learning frameworks like TensorFlow and PyTorch.

With the use of open table formats, the same data can be used forBI and analytics workloads as well as AI/ML model training.

Trino, much like PostgreSQL, has emerged as a versatile and powerful tool for general-purpose analytics. Both platforms offer a unified interface for querying data stored in various formats and on different storage systems. This flexibility allows them to support a wide range of use cases, from ad-hoc analytics to complex data processing tasks to ML model training and inference.

Trino is not designed for specialized use cases such as real-time streaming analytics that require latency in milliseconds. For such use cases, options include CelerData / StarRocks, StarTree / Apache Pinot, and Imply / Apache Druid

Performance and Scalability

Batch reports are no longer adequate. An analytical engine that supports low latency, high concurrency queries on disparate data sources can provide organizations with a competitive edge. But, let’s first define key performance and scalability requirements to handle large scale datasets and complex queries efficiently:

- Cost-based query planner: The query planner should not only help pick the best execution plan that minimizes file I/O and network traffic but also learn from previous execution runs’ statistics and automatically self-heal, when necessary.

- Query response time: Support interactive queries, even on large datasets (e.g., petabytes). A modern system should perform efficiently to generate answers on the fly, without relying on pre-calculated results.

- Concurrency: Handle multiple concurrent queries without significant performance degradation, and noisy neighbor problems are no longer acceptable

- In-memory processing: Processing data in-memory, using caches and columnar formats to enhance performance.

- Horizontal and vertical scaling: Provide automatic and elastic scaling by adding more nodes to a cluster to handle increased workloads or scale up by increasing the resources (CPU, memory) of individual nodes is a key requirement.

In the cloud with pay-as-you-go pricing models, faster performance typically is associated with lower costs and better user experience. In current times of economic headwinds, businesses are closely watching their infrastructure spend and performance costs to avoid running workloads on a lot of expensive hardware or overpaying without significant performance gains.

Trino uses a MPP architecture which was designed from the ground up for SQL processing on Facebook’s massive 300 PB lake. It breaks down queries into stages and executes them concurrently.

At a high level, Trino’s execution engine comprises:

- Coordinator: The coordinator creates and optimizes the SQL plan and then manages query execution and resource allocation. It can be thought of as the brain of the system.

- Worker: A Worker executes query tasks assigned to it from the coordinator and accesses data sources for processing. When multiple workers are used, as is often the case, the query execution happens in parallel across workers on different machines. Trino can efficiently scale performance linearly by adding more workers.

- Connector: Connectors provide the interface between Trino and different data sources. Common data sources include message buses like Kafka, lakehouses, operational datastores such as PostgreSQL, data warehouses such as Snowflake and Teradata, and non-relational systems such as MongoDB.

Trino leverages columnar data formats like Parquet, which store data for each column contiguously, enhancing query performance. It pushes down filter and projection operations closer to the data source, reducing data movement and improving query response times.

Similar to PostgreSQL, Trino prioritizes performance and scalability. Trino’s focus on distributed processing and scalability makes it a particularly well-suited option for analytical workloads that require high performance and the ability to handle massive datasets.

Open Source & Standards

Proprietary analytics engines don’t share their source code publicly and use a proprietary API (SQL dialect, type system, wire protocol, etc.), typically, leading to a higher total cost of ownership (TCO), a lack of flexibility, and depending on the depth of utilization can lead to resource and cost intensive switching costs. Organizations bought into these engines due to a lack of open-source alternatives or in some cases familiarity and comfort with the incumbent architecture they support (data warehouse) . For them to consider alternatives, key requirements to consider when evaluating open-source analytical engines include:

- Open standards: They give organizations theability to easily migrate from one stack to another without incurring costly and risky data, SQL, and application migrations. This is a significant development because thus far wider adoption of analytics has stagnated. With open standards, organizations are able to maintain more control while gaining future optionality and unlocking their disparate data sources to more use cases and users.

- Licensing: Not all open-source licenses are created equal. Some, like Linux Foundation, MIT, and Apache are more permissive than others and hence provide fewer restrictions on their usage.

- Community: A vibrant and engaged community is essential for ongoing development, support, and innovation. The community also includes an ecosystem of plugins, connectors, and tools that can extend the engine’s functionality and integration capabilities into existing investments.

Open-source ecosystem has finally arrived and is here to stay. From Parquet as the storage standard, open table formats such as Iceberg, Kafka, and Flink for real-time data streaming to analytical engines, like Druid, DuckDB, Flink, Pandas, Pinot, Ray, Spark, and Trino. Organizations finally have an opportunity to build a low-cost end-to-end architecture that is enterprise-class while avoiding vendor lock-in.

Being an open-source solution, Trino provides organizations with the flexibility to customize and extend the engine to meet their specific needs. The active community and continuous contributions ensure that Trino remains at the cutting edge of analytical processing. This rapidly growing community is led by its original creators, which drives continuous innovation and improvements. This community-driven approach has incorporated the latest advancements in data analytics technology as follows:

Just like open-source PostgreSQL has witnessed an explosion of commercial extensions, like Amazon Aurora, Google Cloud AlloyDB, the Trino community is also witnessing the same expansion. Three examples of this category are Starburst Galaxy, Starburst Enterprise Platform, and Amazon Athena. They all share the API of the open-source engine, which means users can seamlessly migrate their existing Trino workloads between open-source and commercial offerings without significant code changes. Some of the commercial enhancements include, Starburst’s Warp Speed, which keeps frequently accessed data in memory on worker nodes, to speed up query execution. Warp Speed is built on top of Trino without modifying Trino itself.

Both Trino and PostgreSQL are open-source projects that offer a unified SQL interface. This open-source nature provides organizations with the ability to build flexible, scalable, and cost-effective data solutions while maintaining control over their data and avoiding vendor lock-in.

User Experience and Ecosystem

A modern analytical engine should provide a clean, user-friendly, and intuitive experience with visual tools and drag-and-drop capabilities that simplify query creation and data exploration. In the world of AI, support for natural language queries can make it easier for users to interact with the engine.

Ecosystem is important. If the engine does not work with the tool users already use, they are less likely to use it. A modern analytics platform must offer connectors to various data sources, including relational databases, data lakes, and lakehouses. In addition, it must allow users to create custom connectors for new data sources and functions.

The engine should streamline user onboarding. Adopting open query languages like SQL can significantly contribute to this goal. SQL has been and continues to remain the lingua franca of analytics, in spite of being decades old. However, the majority of vendors implement SQL with their own twist which makes the SQL incompatible with other engines. Although most vendors support some version of ANSI SQL standards, customization seeps in when they add proprietary code to meet their specific needs.

Trino is highly compliant with the ANSI SQL standard, making it easy to integrate into existing data pipelines and applications. Its comprehensive SQL functionality includes advanced query capabilities, dynamic filtering, common table expressions (CTEs), windowing, and built-in functions for data manipulation and aggregation.

As mentioned in the previous section, Trino has a pretty robust ecosystem of connectors. Its plugin system allows developers to write their own connectors, security plugins, and functions to extend the system. This makes Trino very customizable without having to fork the code to add custom changes.

Also, as noted above, Trino is available across a strong portfolio of commercial offerings that provide users options to adapt the way Trino is leveraged based on the evolving needs of the business.

It should be noted that Trino does not natively support unstructured data sources. It provides a robust set of JSON functionality for semi-structured data. Beyond that, its plugin architecture allows for customer connectors and UDFs to process unstructured data.

Trino, like PostgreSQL, prioritizes user experience by offering intuitive interfaces and supporting various data sources. Trino’s plugin system allows for customization and integration with new data sources, while PostgreSQL also offers connectors to a wide range of databases and tools. Although PostgreSQL includes robust support for vector index, Trino does not yet support vector embeddings and the ability to do semantic search based on similarity.

Reliability and Deployment

Modern analytical engines must be flexible, scalable, and reliable to meet the demands of today’s data-driven organizations. They should be easily deployed in various environments, handle growing workloads, and ensure high availability and data security.

In the previous section, we explored user experience requirements and Trino’s capabilities. The user experience aspect also extends to DevOps engineers, who want the ability to automate data pipeline tasks like testing, versioning, deployments, and orchestration. These features help improve reliability.

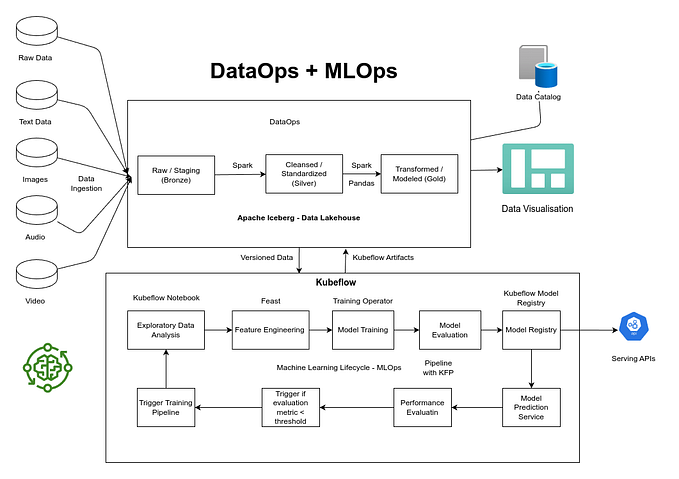

As mentioned earlier, Trino’s fault tolerant execution is being used for large scale transformations in the lake to prepare data for consumption by Ad hoc, BI, data applications, and ML/AI consumers. Users are using Trino to prepare their bronze, silver, and gold layers. And also to serve up the gold layer. Also worth mentioning the dbt integration with Trino enables analytics engineers to build their dbt models and let Trino power the transformation.

The Trino project provides a Docker image for quick provisioning and management with Kubernetes It is optimized for both on-premise and cloud environments, including AWS, Azure, and Google Cloud, making it adaptable to heterogeneous infrastructure setups. This scalable and flexible deployment approach allows for efficient resource management in various operational environments and eases the portability between on-premises and various cloud hyperscalers.

Trino’s deployment is seamless as it’s a Java program that can be run anywhere Java runs with complete freedom to customize it as per users’ requirements. Many of the largest organizations with complex infrastructure and security requirements use Trino.

Both Trino and PostgreSQL can be deployed in various environments, including on-premises and cloud, and are integrated with Kubernetes. Like PostgreSQL, Trino’s fault-tolerant execution and scalability make it well-suited for handling large-scale data processing workloads.

Security and Governance

Security and governance are critical aspects of modern analytical engines, especially when handling sensitive data. While this is a vast topic deserving of in-depth analysis, for this analysis, we’ll focus on the following key requirements:

- Access control: Granular control over who can access and modify data and enabling strong authentication and authorization mechanisms to prevent unauthorized access. In addition, it includes built-in capabilities to detect and prevent security threats, such as SQL injection and data exfiltration.

- Data encryption: Encryption of data at rest and in transit to protect against unauthorized access.

- Regulatory compliance: Adherence to industry standards and regulations (e.g., GDPR, HIPAA, PCI DSS).

- Lineage and audit logging: Tracking the province of data to ensure data integrity and quality and logging of user activity to track and identify potential security breaches.

- Metadata management: Centralized management of metadata to provide context and understanding of data and a single pane of glass to define and enforce policies.

Trino is used by some of the most security conscious companies, including Goldman Sachs and government agencies not only due to its built-in security capabilities but also because of its high degree of configurability to fit into most company’s security postures. Furthermore, the customization allows users to write their own plugins if the out-of-the-box capabilities don’t meet the organization’s needs.

Trino’s built-in security capabilities include:

- Supports various authentication mechanisms, such as Kerberos, LDAP, OAUTH, and HTTP Basic authentication. It also provides fine-grained authorization controls to restrict access to specific data and resources.

- Encrypts data at rest and in transit to help protect sensitive data from unauthorized access even if the underlying storage system is compromised.

- Creation of access control lists (ACLs) to control who can access and modify data. This ensures that only authorized users have access to sensitive information.

- Logs user activity, including query execution, data access, and system events. This helps identify potential security breaches and track unauthorized access.

- Includes built-in mechanisms to prevent SQL injection attacks, which can be used to compromise data security.

- Integrates with external security frameworks and tools to provide additional security features and protection.

Both Trino and PostgreSQL offer a high degree of security, making them suitable for organizations that handle sensitive data. Trino’s built-in security features, combined with its flexibility and integration capabilities, make it a strong choice for organizations seeking a secure and reliable data management platform.

Conclusion

Trino has emerged as a leading contender for becoming the open-source standard for data-intensive high-speed analytics, much like PostgreSQL has become for operational databases. It excels in key areas essential for modern analytics, demonstrating versatility in supporting a wide range of use cases from ad-hoc queries to complex ETL processes and AI/ML workflows.

Trino’s high-speed, low-latency querying on large datasets through its MPP architecture ensures impressive performance. As an open-source solution, it provides flexibility, customization options, and helps avoid vendor lock-in.

The platform boasts a robust ecosystem with an active community, extensive connectors, and various commercial offerings. Trino’s ability to scale easily, both horizontally and vertically, allows it to meet growing data needs efficiently. Furthermore, its strong built-in security features and customizable options make it suitable for organizations with stringent data protection requirements.

While Trino may not be ideal for real-time streaming analytics requiring millisecond latency, its strengths in data lakehouse queries, federated queries, ANSI SQL compliance, and integration capabilities make it a compelling choice for organizations seeking a unified analytics platform. As data architectures evolve towards lakehouses and open formats, Trino’s position as a versatile, open-source analytics engine positions it well to become an industry standard in the rapidly changing landscape of data analytics.